My next project is about converting comics into my native language. Since manual work is time-consuming, we are discussing automating it. So I took my first comic, Doctor Strange (English); let's talk about the steps involved.

Comics are a form of visual storytelling that has gained immense popularity over the years. However, one challenge for readers is that comics are often published in a single language, which makes them inaccessible to people who don't understand it. With the help of deep learning, it is now possible to automate much of the language conversion of comics and make them accessible to a wider audience.

Automating the language conversion of comics involves a chain of deep learning models: one to find the text on the page, one to read it, and one to translate it from one language to another. The detection model is trained on a large dataset of comic pages in which the text regions are marked, and the translation model learns from pairs of original and translated text. Once trained, these models can be used to translate the text in new comics automatically.

One of the challenges in automating language conversion of comics is that the text is often integrated with the images. This means that the neural network needs to be able to recognize and extract the text from the images. One approach to addressing this challenge is to use Optical Character Recognition (OCR) technology, which can recognize and extract text from images.

Another challenge is that different languages may have different sentence structures and word orders, making it difficult for the neural network to accurately translate the text. To address this, the neural network can be trained on a larger dataset of translations to improve its accuracy.

One benefit of automating the conversion is speed: a page can be processed far faster than a human could translate and re-letter it. This makes it practical for publishers to release their comics in multiple languages with much less manual translation work. It also makes comics accessible to people who would otherwise never get a translation, such as readers of smaller regional languages or those living in remote areas.

However, there are also some limitations to this technology. One of the challenges is that the neural network may make errors in translating certain words or phrases, especially those with multiple meanings. Another challenge is that the translated text may not always fit seamlessly with the images, which can be distracting for readers.

Despite these challenges, the technology for automating the language conversion of comics using deep learning is advancing rapidly. It is already possible to produce translated pages that look clean and are largely accurate, with a human reviewer correcting the remaining errors. With further improvements, we may soon be able to enjoy comics in many languages, bringing new audiences to this beloved form of storytelling.

In conclusion, automating language conversion of comics using deep learning is a promising technology that has the potential to revolutionize the comic industry. By making comics more accessible to a wider audience, we can foster a greater appreciation for this unique form of storytelling and bring people together across language barriers.

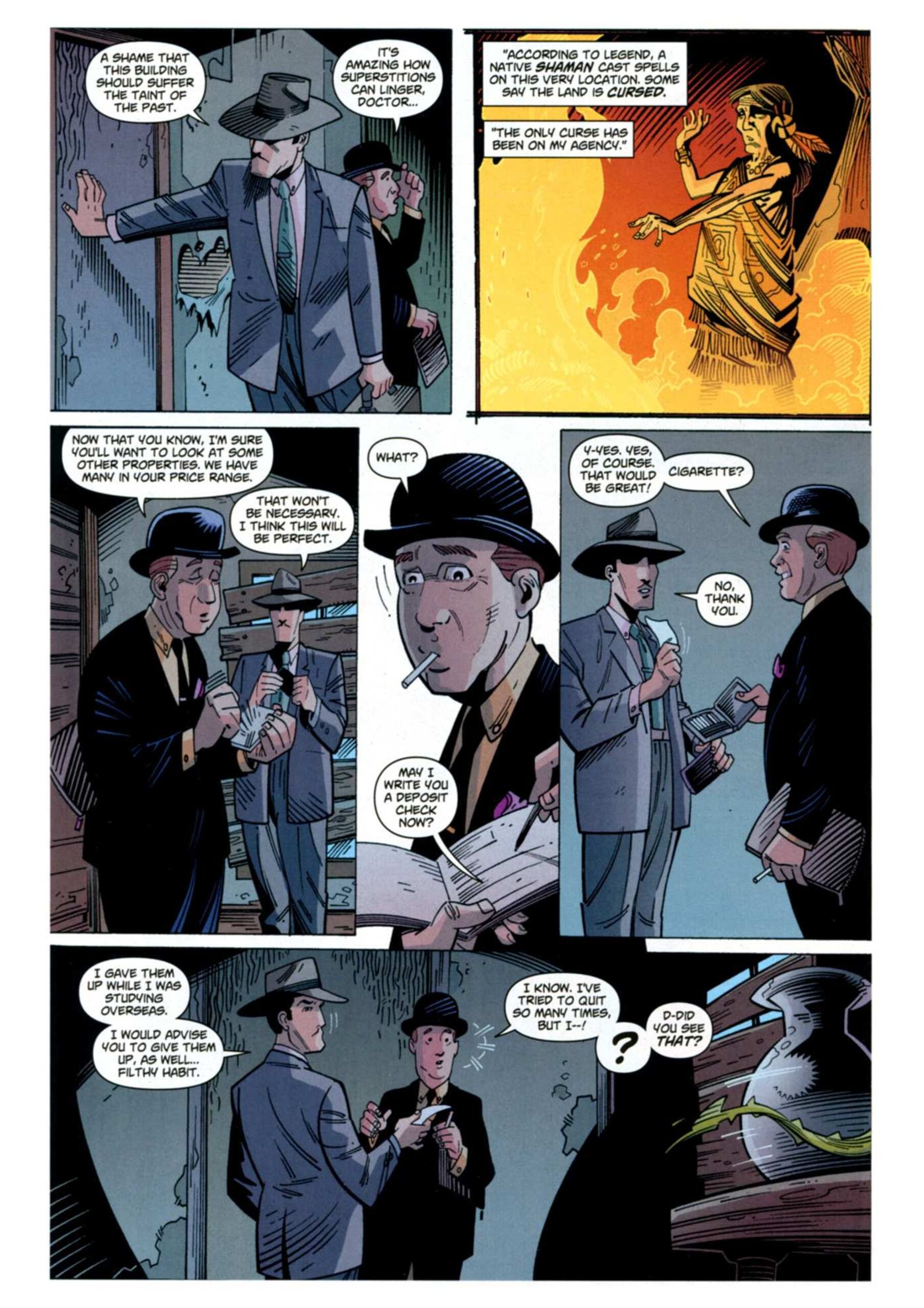

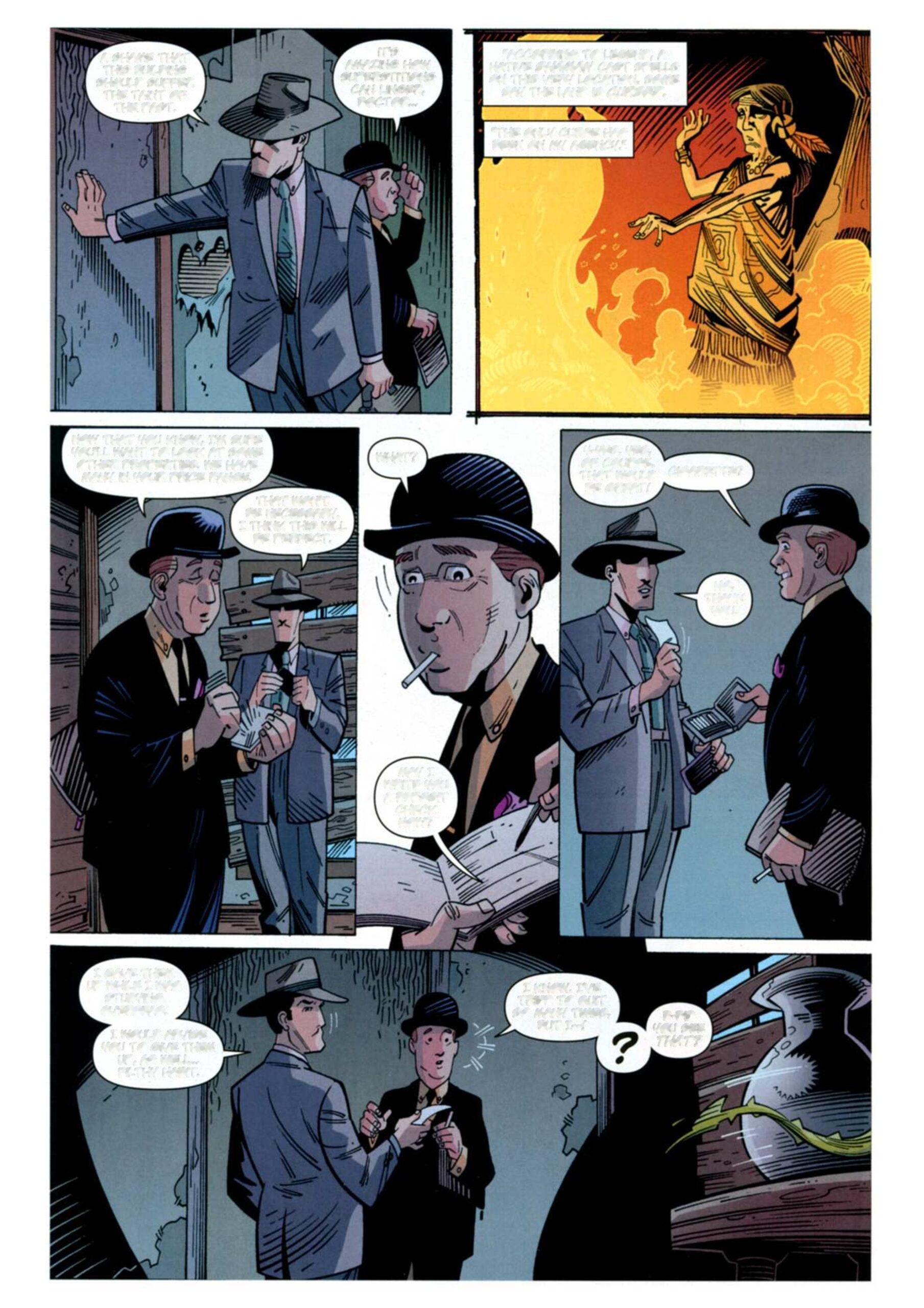

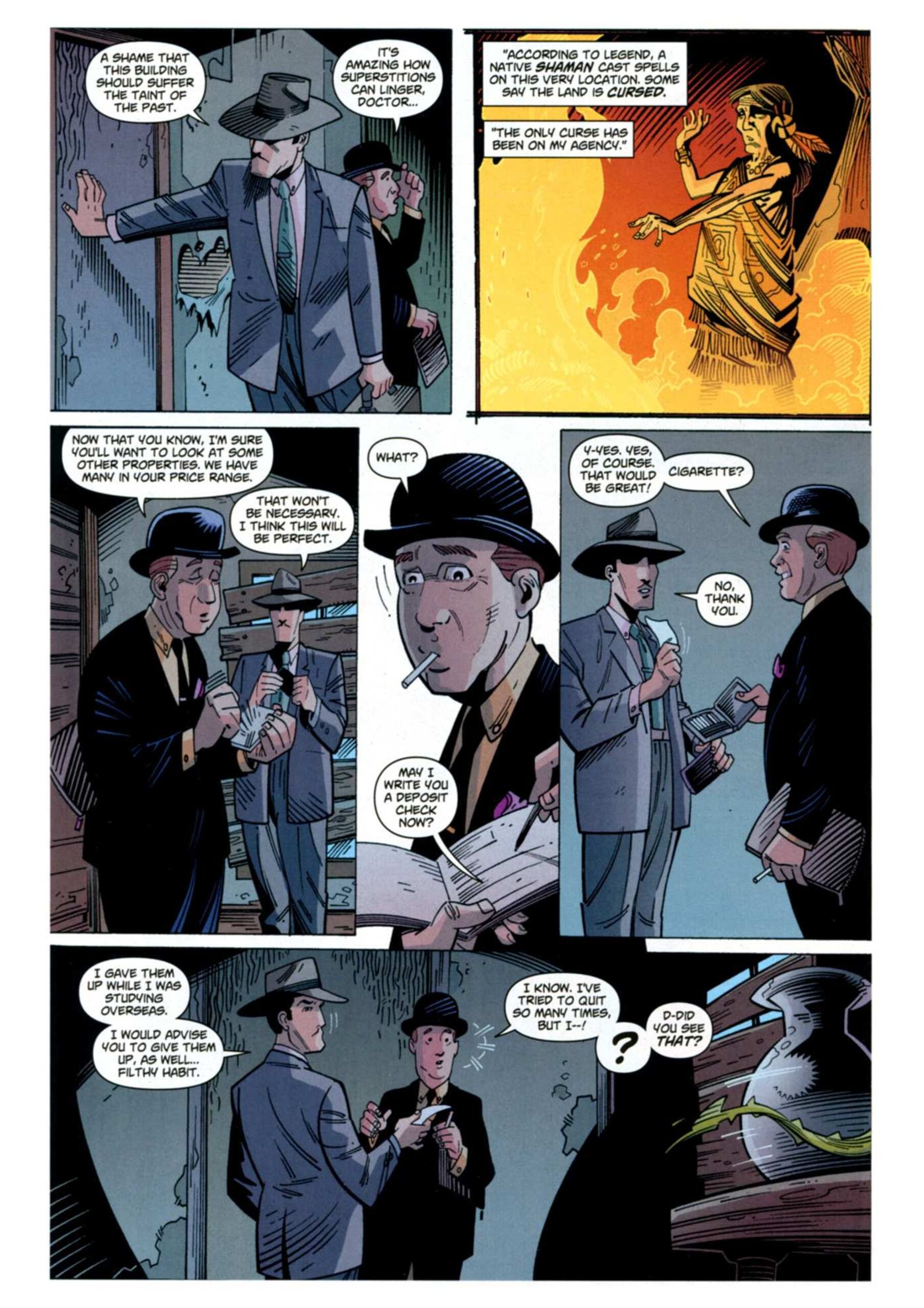

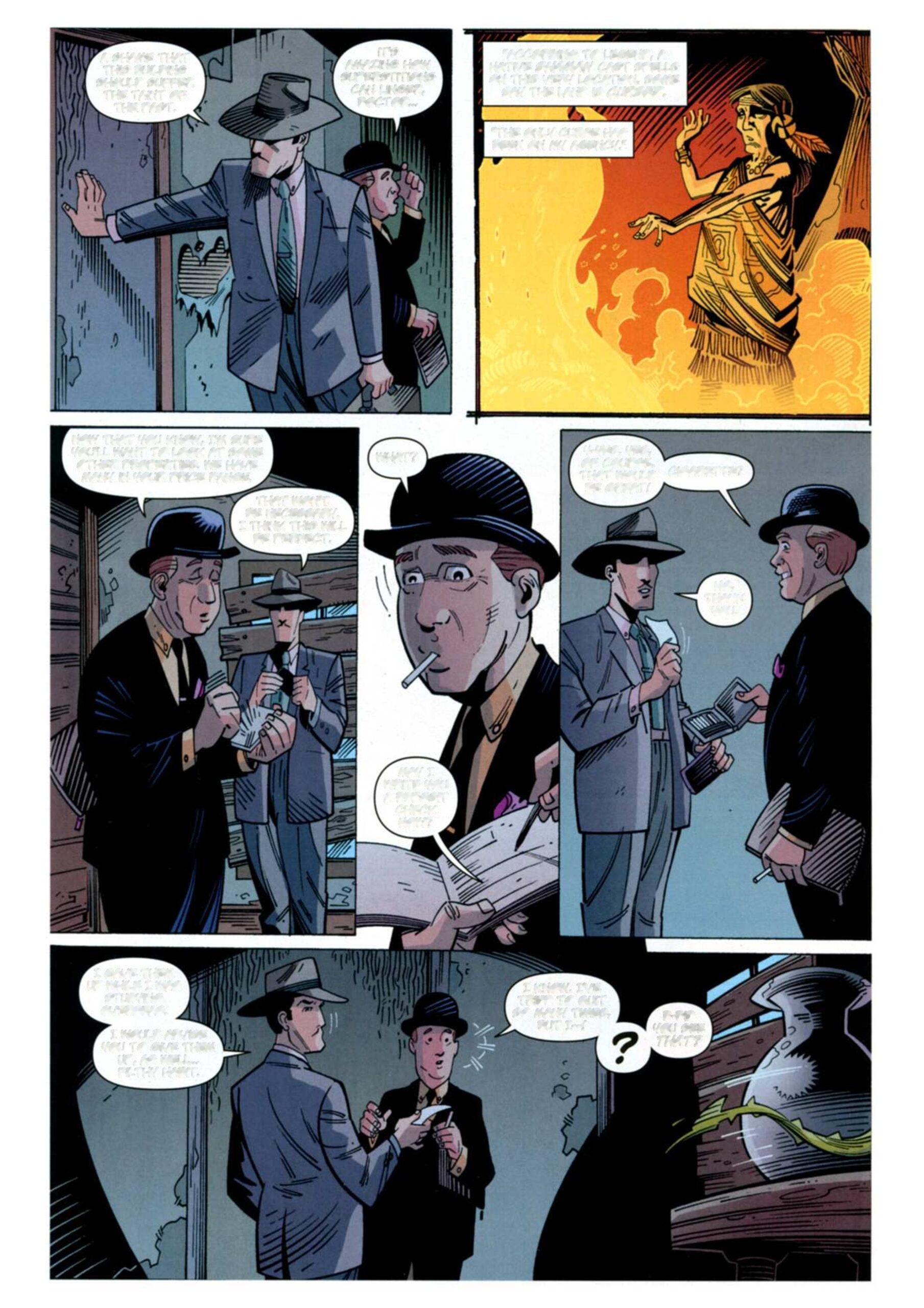

This is the page we are going to translate:

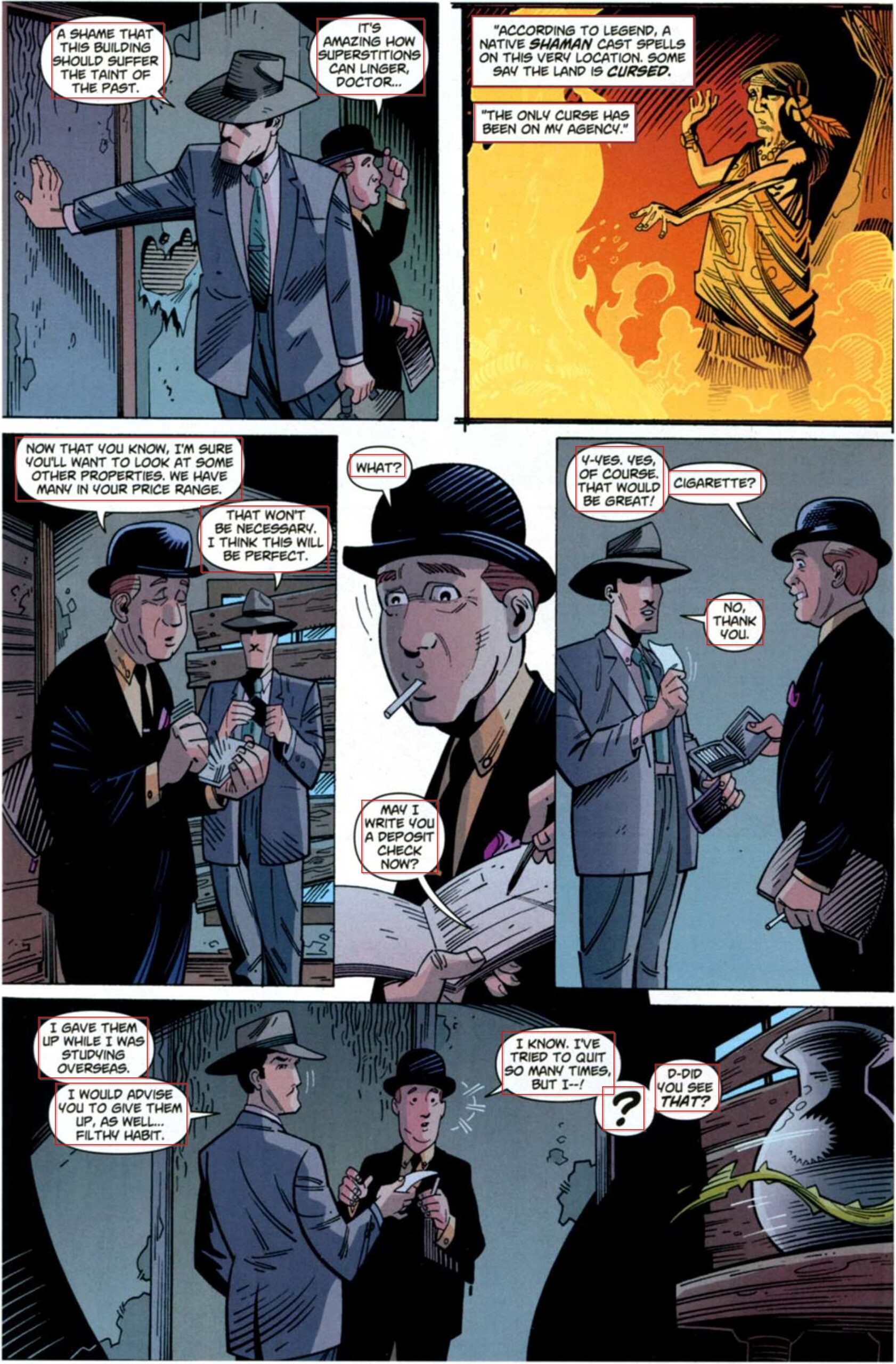

Step 1: Detecting the text (the speech balloons). We pass the page image through a deep learning detection model that identifies all the balloons on it.
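A detection model usually returns many overlapping candidate boxes, each with a confidence score, so its raw output is typically cleaned up before OCR. The sketch below assumes the detector gives us boxes as `(x1, y1, x2, y2)` pixel tuples plus a score per box; the names `filter_balloons`, `score_thresh` and `iou_thresh` are hypothetical, and the threshold values are illustrative defaults, not tuned numbers. It applies standard greedy non-maximum suppression based on intersection-over-union (IoU).

```python
from typing import List, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixels, with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_balloons(boxes: List[Box], scores: List[float],
                    score_thresh: float = 0.5,
                    iou_thresh: float = 0.5) -> List[Box]:
    """Drop low-confidence detections, then apply greedy non-maximum suppression."""
    # Keep only confident detections, highest score first.
    candidates = sorted(
        (pair for pair in zip(scores, boxes) if pair[0] >= score_thresh),
        key=lambda pair: pair[0],
        reverse=True,
    )
    kept: List[Box] = []
    for _, box in candidates:
        # Accept a box only if it does not heavily overlap a better-scoring one.
        if all(iou(box, k) < iou_thresh for k in kept):
            kept.append(box)
    return kept


boxes = [(10, 10, 110, 60), (12, 12, 108, 58), (200, 40, 300, 120), (0, 0, 5, 5)]
scores = [0.92, 0.85, 0.77, 0.30]
print(filter_balloons(boxes, scores))
```

Here the second box is a near-duplicate of the first (IoU of about 0.88) and is suppressed, and the last box falls below the score threshold, so two balloons remain.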

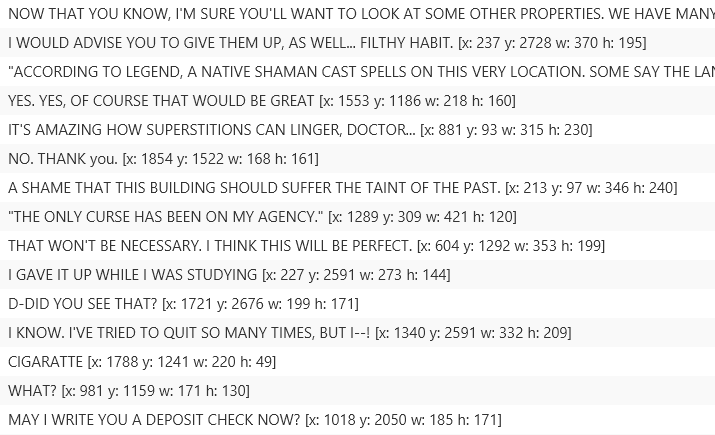

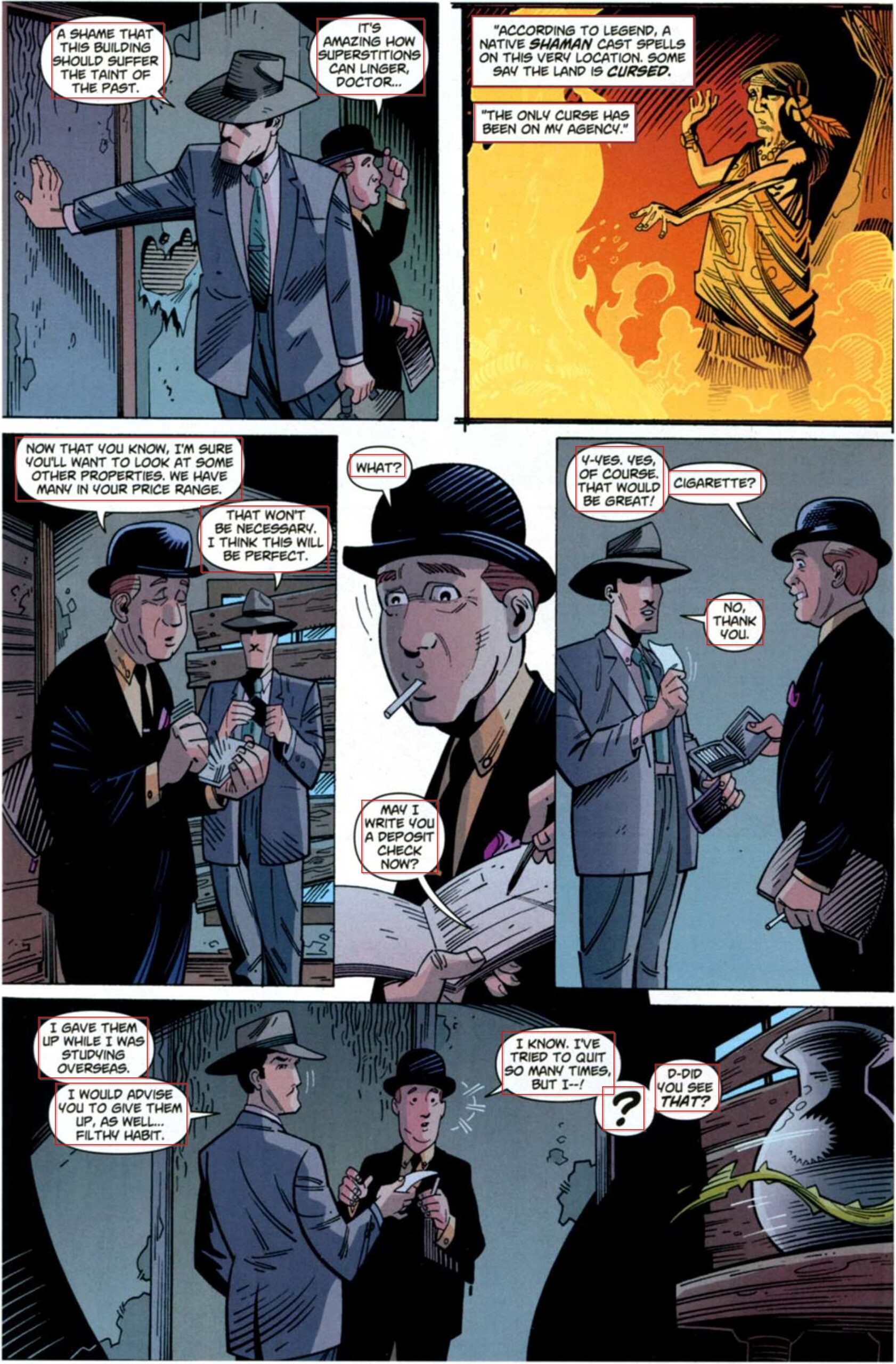

Step 2: OCR. We now have the locations of all the text boxes, so we take each of them and pass it through an OCR engine. The quality of the OCR output depends on the quality of the source image; we can use Tesseract, ABBYY, Google, or any other OCR engine as needed.
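Whichever OCR engine is used, the raw text for one balloon tends to come back as several short lines, and comic letterers often hyphenate a word across a line break. Joining these into a single line before translation gives the translator a complete sentence. This is a minimal sketch; `clean_balloon_text` is a hypothetical helper, and it assumes the OCR result for each balloon is available as a plain string.

```python
import re


def clean_balloon_text(raw: str) -> str:
    """Normalize raw OCR output from one speech balloon into a single line."""
    # Rejoin a word hyphenated across a line break: "SANC-\nTUM" -> "SANCTUM".
    text = re.sub(r"(\w)-\s*\n\s*(\w)", r"\1\2", raw)
    # Collapse the remaining line breaks and repeated spaces into single spaces.
    return re.sub(r"\s+", " ", text).strip()


print(clean_balloon_text("I MUST FIND THE\nSANC-\nTUM BEFORE\nDAWN!\n"))
```

One trade-off: a genuinely hyphenated compound that happens to break at the end of a line (for example "WELL-" / "KNOWN") is also joined, so this rule may need adjusting per comic.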

We get something like this:

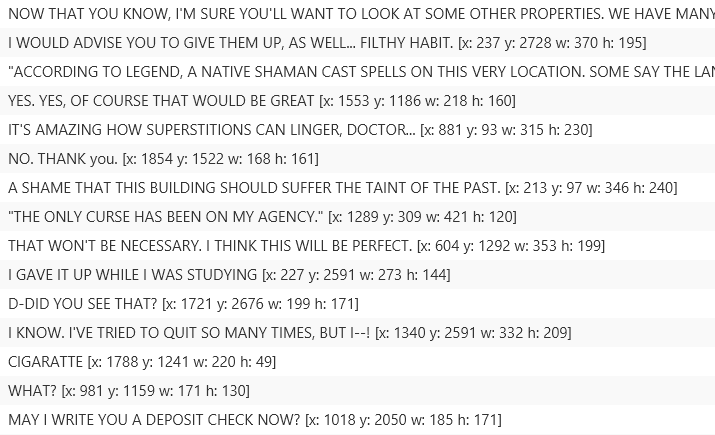

Step 3: Translation. We can use any available machine translation service or model.

At this point, the translation may not be perfect, so we cannot depend on a generic translator alone. We need to tune it, or build our own translation model for each type of comic. Different comics have their own style of dialogue, phrasing, and delivery: the English in Amar Chitra Katha reads very differently from the English in a Marvel comic. So off-the-shelf conversational models will not give professional-quality output, but automated translation works for the time being.
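One lightweight way to tune a generic translator for a particular comic, short of training a new model, is a per-comic glossary: character names and recurring terms are swapped for placeholder tokens before translation and replaced with an approved rendering afterwards. This is a sketch of that general technique, not the method used for the pages below. `translate_with_glossary` is a hypothetical helper, `translate` stands in for whatever translation service or model is used, and the approach assumes that service leaves tokens like `__G0__` untouched (worth checking for the service you pick).

```python
import re
from typing import Callable, Dict


def translate_with_glossary(text: str, translate: Callable[[str], str],
                            glossary: Dict[str, str]) -> str:
    """Translate `text`, forcing fixed target-language renderings for glossary terms."""
    placeholders: Dict[str, str] = {}
    # Substitute longer terms first so "Doctor Strange" wins over "Strange".
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__G{i}__"
        # Comic lettering is mostly uppercase, so match case-insensitively.
        pattern = re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        text, count = pattern.subn(token, text)
        if count:
            placeholders[token] = glossary[term]
    translated = translate(text)
    # Put the approved rendering back in place of each token.
    for token, target in placeholders.items():
        translated = translated.replace(token, target)
    return translated


# Toy stand-in for a real translator: it just uppercases the text.
glossary = {"Doctor Strange": "DS_ML", "Wong": "WONG_ML"}
print(translate_with_glossary("Wong, where is DOCTOR STRANGE?", str.upper, glossary))
```

Because matching ignores case, a name that is also an ordinary word ("STRANGE") will always get the name's rendering, so such entries should be added with care.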

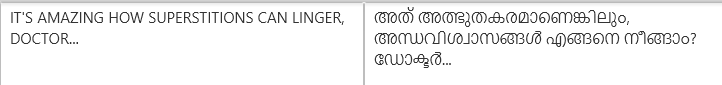

Step 4: Text masking. Now that we know where our text is, the next step is to mask it. This uses AI models to detect the text inside the balloons we found and remove it. Alternatively, we can simply clear each balloon entirely, though this may not be perfect since balloons can be of any shape.
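For balloons with a flat fill, a simple masking approach is to paint over each text box with the balloon's background colour. The sketch below assumes a grayscale page stored as a list of rows (`image[y][x]` in 0..255), a text box `(x1, y1, x2, y2)` with exclusive end coordinates that sits inside its balloon, and that the most common pixel value on the box border is the fill colour; `mask_text_box` and `pad` are hypothetical names. Textured or gradient backgrounds need real inpainting instead (OpenCV's `cv2.inpaint` is one option).

```python
from collections import Counter
from typing import List, Tuple

Image = List[List[int]]  # grayscale page, image[y][x] in 0..255


def mask_text_box(image: Image, box: Tuple[int, int, int, int],
                  pad: int = 2) -> Image:
    """Return a copy of `image` with the padded text box filled with background colour."""
    h, w = len(image), len(image[0])
    # Grow the box slightly to catch anti-aliased glyph edges, clipped to the page.
    x1, y1 = max(0, box[0] - pad), max(0, box[1] - pad)
    x2, y2 = min(w, box[2] + pad), min(h, box[3] + pad)
    # Estimate the balloon fill as the most common value on the box border.
    border = [image[y][x] for x in range(x1, x2) for y in (y1, y2 - 1)]
    border += [image[y][x] for y in range(y1, y2) for x in (x1, x2 - 1)]
    background = Counter(border).most_common(1)[0][0]
    out = [row[:] for row in image]  # copy so the original page is untouched
    for y in range(y1, y2):
        for x in range(x1, x2):
            out[y][x] = background
    return out


# A 10x10 white "balloon" with a 2x2 block of dark "text" in the middle.
page = [[255] * 10 for _ in range(10)]
for y in (4, 5):
    for x in (4, 5):
        page[y][x] = 0
cleaned = mask_text_box(page, (3, 3, 7, 7), pad=1)
print(cleaned[4][4], page[4][4])
```

Working on a copy keeps the original page available for comparison, which helps when reviewing masks that went wrong.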

Step 5: Replace the original text. We can now paste our translated content over these bubbles.
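Translated text is rarely the same length as the original, so before pasting it we need a font size and line breaks that fit the balloon. This sketch picks the largest size at which the wrapped text fits a box. It assumes every character is roughly `char_w_ratio` times the font size wide, which is only an approximation: Malayalam glyphs vary in width and `len()` counts code points, not visible characters, so a real renderer should measure with the actual font (for example Pillow's `ImageDraw.textbbox`). `fit_text` and its parameters are hypothetical.

```python
import textwrap
from typing import List, Optional, Tuple


def fit_text(text: str, box_w: int, box_h: int,
             sizes: range = range(28, 7, -1),
             char_w_ratio: float = 0.6,
             line_h_ratio: float = 1.2) -> Optional[Tuple[int, List[str]]]:
    """Return (font_size, lines) for the largest size whose wrapped text fits the box."""
    for size in sizes:
        chars_per_line = max(1, int(box_w / (size * char_w_ratio)))
        # Never split words; a word longer than the line makes this size fail.
        lines = textwrap.wrap(text, width=chars_per_line, break_long_words=False)
        too_wide = any(len(line) > chars_per_line for line in lines)
        too_tall = len(lines) * size * line_h_ratio > box_h
        if not too_wide and not too_tall:
            return size, lines
    return None  # nothing fits: shorten the translation or enlarge the balloon


print(fit_text("HELLO WORLD", box_w=60, box_h=40))
```

Returning `None` rather than shrinking indefinitely flags balloons where the translation itself should be shortened, which is exactly the kind of case a human reviewer should look at.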

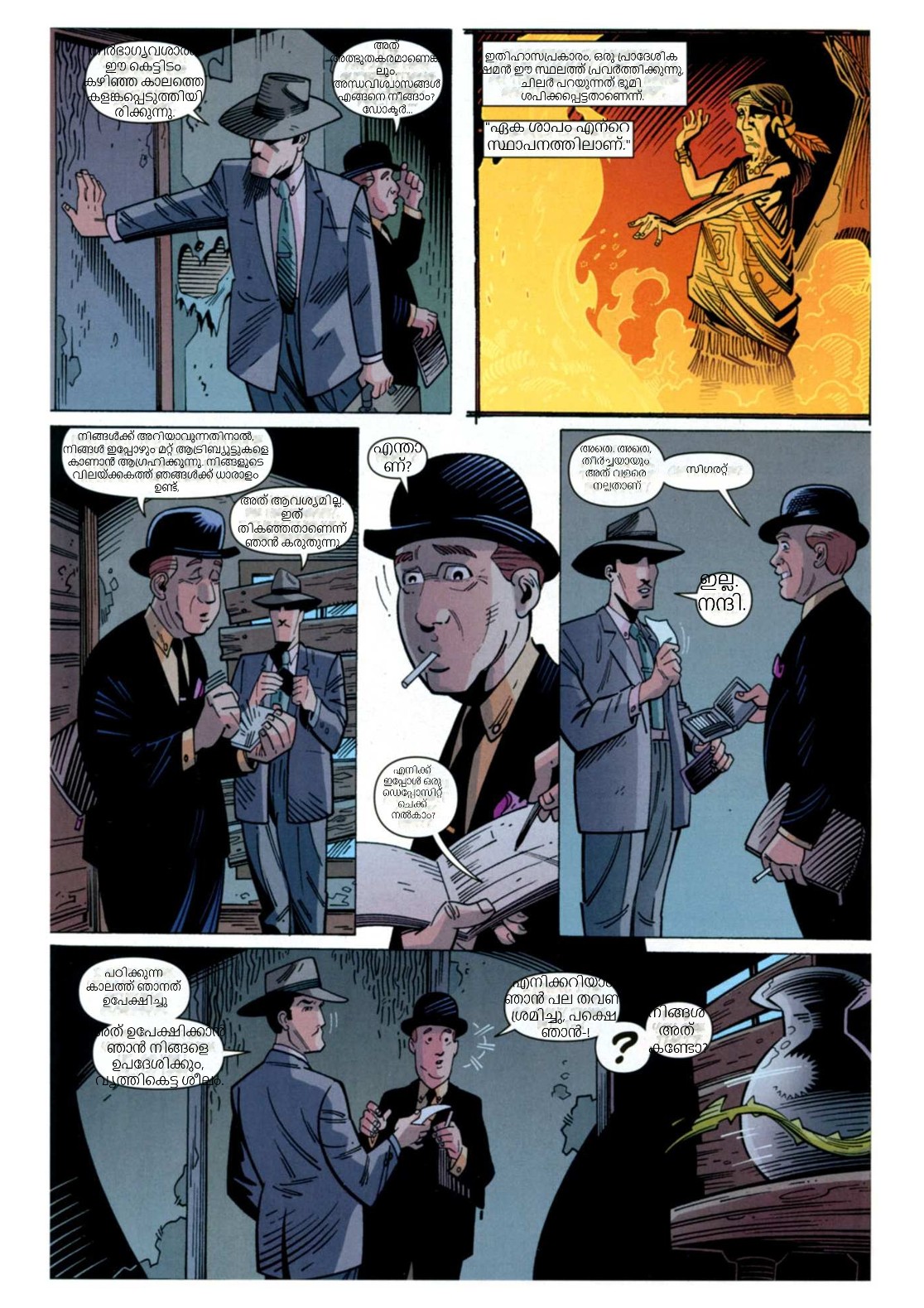

And with that, the translated comic is ready.

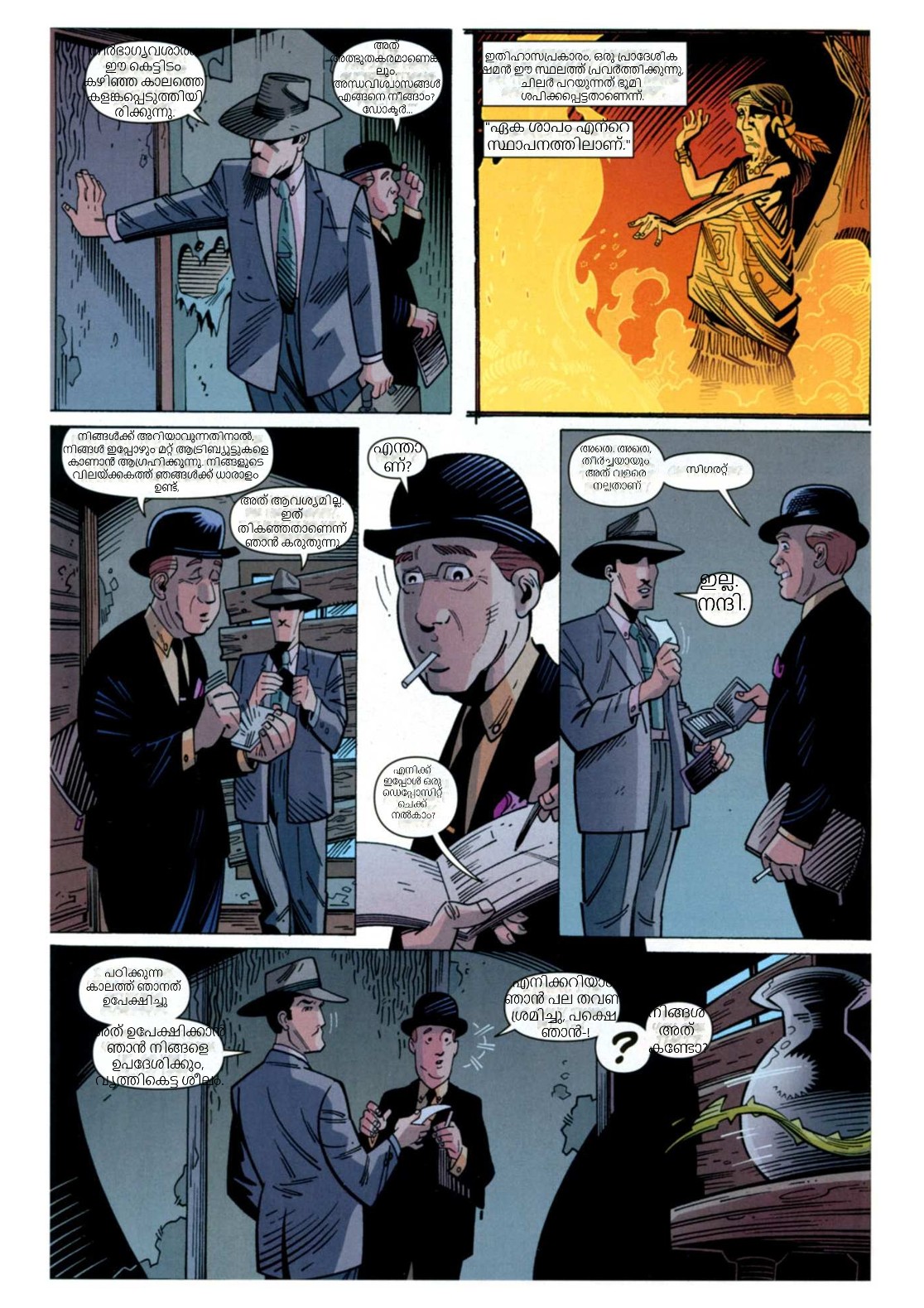

Here I’m adding the converted comic (just a couple of pages).

Original: Dr. Strange from Marvel

Translated: Dr. Strange From Marvel – Malayalam Translated

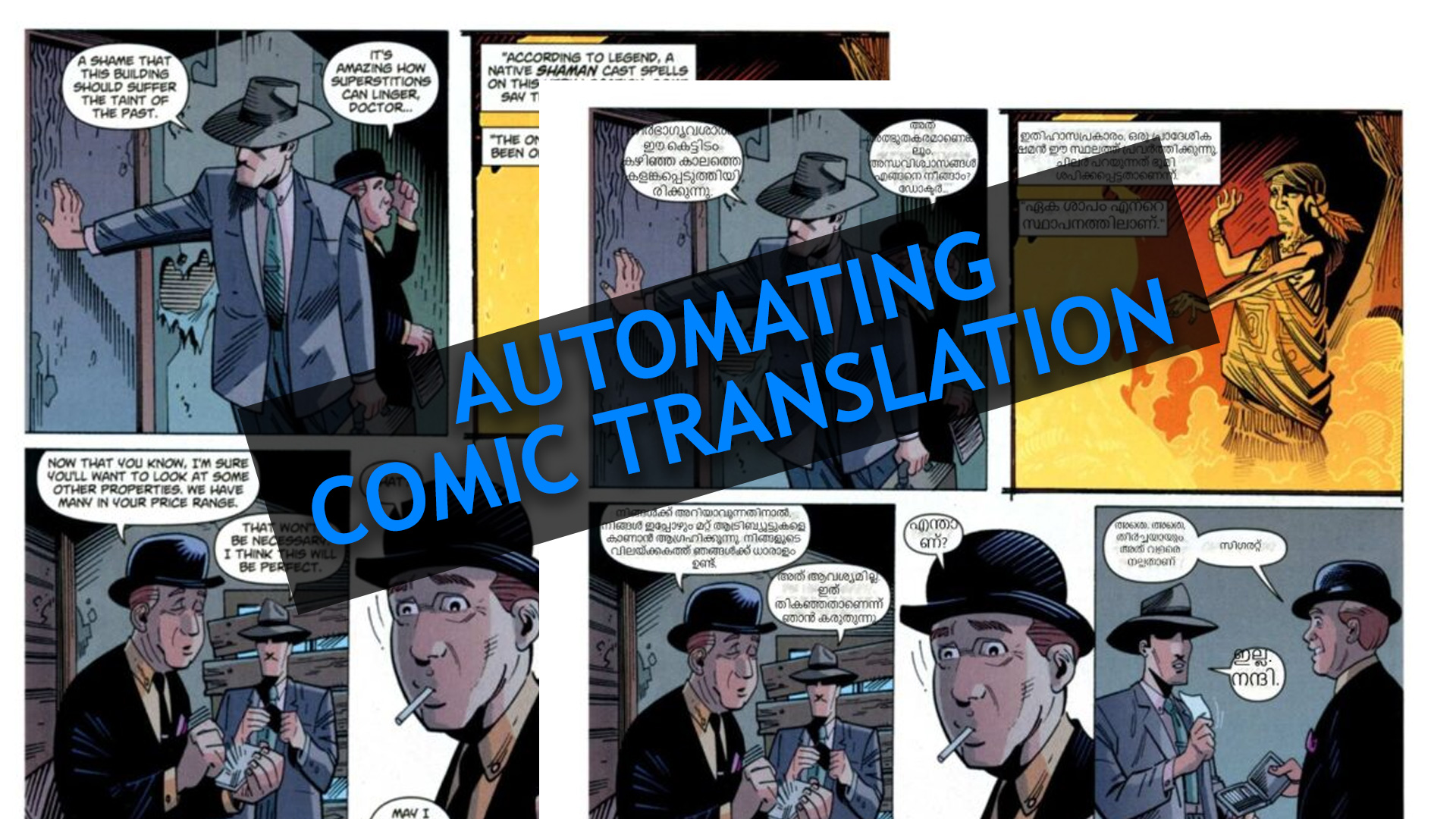

Here is one more.

I did this one in a hurry (ignore the imperfect mask removal), but it does the job.

Original: Arjun Unicrystal

Translated: Arjun – Unicrystal – Malayalam Translated

So if you want to convert any comics, you can PM me. All we need is a person (a manual translator) who can verify the automatically translated content and correct it.

Thanks for reading.